Mass-mailing-system e-mail histories have put us over our Salesforce data storage limits.

Various admin tools say it's the task table hogging all the space. Indeed, when I exported the entire tasks table as a CSV via Apex Data Loader, it was a gigabyte large on my hard drive. So this was obviously the table that we needed to delete some records out of (archiving their CSV-export values on a fileserver we own), but it sure wasn't going to be an efficient file to play with in Excel.

This post brings you along on some of the miscellaneous play I did with the file. I'll be describing output, rather than showing it to you, as this is real company data and I'd rather not take the time to clean it up with fake data for public display.

First, I executed the following statements:

import pandas

df = pandas.read_csv('C:\\example\\tasks.csv', encoding='latin1', dtype='object')

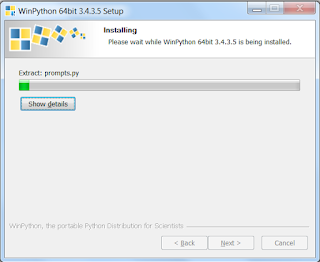

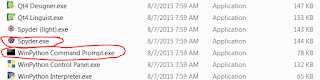

Then I commented out those lines (put a "#" sign at the beginning of each row) because that 2nd line of code had taken a LONG time to run, even in Python. I knew that Spyder, my "IDE" that came with "WinPython," picks up where the last code left off when you hit the "Run" button again, so in further experimentation, I was simply careful not to accidentally overwrite the value of "df" (that way, I could keep executing code against it).

The next thing I did was export the first 5 lines of DF to CSV, so they WOULD be openable in Excel, just in case I wanted to.

df.head().to_csv('C:\\example\\tasks-HEAD.csv', index=0, quoting=1)

I also checked the row count in my Task table, which is how I knew the CSV file had 1 million lines (I exported it yesterday and forgot the result from Apex Data Loader by today):

print(len(df.index))

Next, I interested myself in the subject headings, since I knew that Marketo & Pardot always started the Subject line of mass e-mails in distinctive ways. I just couldn't remember what those ways were.

For starters, I was curious how many distinct subject headings were to be found among the 50 million rows.

print(len(df['SUBJECT'].unique()))

7,000, it turns out.

Next, I wanted to skim through those 7,000 values and see if I could remember what the Marketo-related ones were called.

Just like filtering an Excel spreadsheet and clicking the down-arrow-icon that shows you all the unique values in alphabetical order gets a little useless when you have too many long, similar values, a Python output console isn't ideal for reading lots of data at once.

Therefore, I exported the data into a 7,000-line, 1-column CSV file (which would open easily & read well in a text editor like Notepad).

pandas.DataFrame(df['SUBJECT'].sort_values().unique()).to_csv('C:\\example\\task-uniquesubjects.csv', index=0, header=False)

Aha: Pardot used "Pardot List Email:" and Marketo used "Was Sent Email:" at the beginning of all subject lines in records that I considered candidates for deletion if they were over 2 years old.

(Note: if an error message comes up that says, "AttributeError: 'Series' object has no attribute 'sort_values'," your version of the Pandas plugin to Python is too old for the ".sort_values()" command against Series-typed data. Replace that part of the code with ".sort(inplace=False)".

I have two different business units willing to let me delete old e-mail copies, but they have different timeframes. One is willing to let me delete anything of theirs over 2 years old. The other is willing to let me delete anything of theirs over 1 year old. So now I needed to comb through the raw data matching my two subject heading patterns, looking for anything that would indicate business unit.

print(df[(df['SUBJECT'].str.startswith('Was Sent Email:') | df['SUBJECT'].str.startswith('Pardot List Email:')) & (pandas.to_datetime(df['ACTIVITYDATE']) < (pandas.to_datetime('today')-pandas.DateOffset(years=2)))].head())

Unfortunately, nothing in any of the other columns gave me a hint which business unit had been responsible for sending the e-mail.

I knew that we were using Marketo long before Pardot ... if I just deleted Marketo outbound e-mails over 2 years old (the least common denominator between the two business units' decisions), how many rows would I be deleting?

print(len(df[(df['SUBJECT'].str.startswith('Was Sent Email:')) & (pandas.to_datetime(df['ACTIVITYDATE']) < (pandas.to_datetime('today')-pandas.DateOffset(years=2)))]))

Hmmm. 85,000. Out of 1 million. Well ... not a bad start (about 85MB out of 1GB)...but I need to find more.

Come to think of it, how many is "both Pardot and Marketo, more than a year old," period?

print(len(df[(df['SUBJECT'].str.startswith('Was Sent Email:') | df['SUBJECT'].str.startswith('Pardot List Email:')) & (pandas.to_datetime(df['ACTIVITYDATE']) < (pandas.to_datetime('today')-pandas.DateOffset(years=1)))]))

200,000. Out of 1 million. So now we're talking about getting rid of 200MB of data (the table is about 1GB), which is probably enough to buy us some time with Salesforce.

So our happy medium is somewhere in the middle, and we probably can't do much better than getting rid of 200MB of data given the business units' requests.

Maybe, though, it wouldn't be a bad idea to just start with the low-hanging fruit and get rid of that first 80MB of data or so (Marketo >2 years old).

Let's dive just a little deeper into what I think is "Marketo 2-Year-Old e-mail copies" to make absolutely sure that that's what they are.

First ... I when I printed the "head()" of Marketo e-mails, I noticed that there seemed to be some diversity in a custom field called "ACTIVITY_REPORT_DEPT__C." Let's see what we've got in there:

tempmksbj2yo = df[(df['SUBJECT'].str.startswith('Was Sent Email:')) & (pandas.to_datetime(df['ACTIVITYDATE']) < (pandas.to_datetime('today')-pandas.DateOffset(years=2)))]

print(tempmksbj2yo['ACTIVITY_REPORT_DEPT__C'].unique())

"ERP API", "Marketo Sync," & "Student Worker

How many do we have of each?

tempmksbj2yo = df[(df['SUBJECT'].str.startswith('Was Sent Email:')) & (pandas.to_datetime(df['ACTIVITYDATE']) < (pandas.to_datetime('today')-pandas.DateOffset(years=2)))]

print(tempmksbj2yo.groupby('ACTIVITY_REPORT_DEPT__C').size())

Good to know - there are just a few dozen rows that aren't "Marketo Sync." In future logic (as below), I'll further specify that a "Marketo" e-mail has this "Marketo Sync" trait.

Now let's take our records we want to delete from Salesforce and get them ready for doing so by exporting the raw data to CSV (for archiving on an on-premise file server) and by exporting the record IDs to a different CSV (for putting into "Apex Data Loader" as a DELETE operation). Let's also export to CSV what remains, to make it easier to pick up where we left off hunting for more deleteable records (this ".to_csv()" operation takes a LONG time to execute because it's a huge file!). And before doing all that (commented out the last line to keep it from running when first verifying this, since it takes so long to run), let's also verify whether the "remaining" file has the same size as the original file minus our "to delete" file.

mk2yologic = (df['SUBJECT'].str.startswith('Was Sent Email:')) & (pandas.to_datetime(df['ACTIVITYDATE']) < (pandas.to_datetime('today')-pandas.DateOffset(years=2))) & (df['ACTIVITY_REPORT_DEPT__C'] == 'Marketo Sync')

mk2yo = df[mk2yologic]

remaining = df[~mk2yologic]

print('df size is ' + str(len(df.index)))

print('mk2yo size is ' + str(len(mk2yo.index)))

print('df - mk2yo size is ' + str(len(df.index) - len(mk2yo.index)))

print('remaining size is ' + str(len(remaining.index)))

mk2yo.to_csv('C:\\example\\task-marketo-2yo-or-more-deleting.csv', index=0, quoting=1)

mk2yo['ID'].to_csv('C:\\example\\task-marketo-2yo-or-more-idstodelete.csv', index=0, header=True)

remaining.to_csv('C:\\example\\task-remaining-after-removing-marketo-2yo-or-more.csv', index=0, quoting=1)

Next, it's on out of Python-land for a while, and into Apex trigger-editing (there's one from an AppExchange plugin that it helps to disable when deleting this many Tasks) and using the "Apex Data Loader" to execute the DELETE operation.

P.S. That didn't help enough. You know where I found another problem? E-mails that went out to a business unit's entire mailing list. 1 task per recipient per e-mail. Here's how I came up with a list of the (10) Subject-ActivityDate combinations that had the largest number of records:

rmn = df[~((df['SUBJECT'].str.startswith('Was Sent Email:')) & (pandas.to_datetime(df['ACTIVITYDATE']) < (pandas.to_datetime('today')-pandas.DateOffset(years=1))) & (df['ACTIVITY_REPORT_DEPT__C'] == 'Marketo Sync'))]

print(rmn.groupby(['SUBJECT','ACTIVITYDATE']).size().order(ascending=True).reset_index(name='count').query('count>10000'))

Those 10 mailings represent 250,000 of our 1 million "Task" records, as verified here:

rmn = df[~((df['SUBJECT'].str.startswith('Was Sent Email:')) & (pandas.to_datetime(df['ACTIVITYDATE']) < (pandas.to_datetime('today')-pandas.DateOffset(years=1))) & (df['ACTIVITY_REPORT_DEPT__C'] == 'Marketo Sync'))]

print(rmn.groupby(['SUBJECT','ACTIVITYDATE']).size().order(ascending=True).reset_index(name='count').query('count>10000')['count'].sum())

Time to talk with some business users about whether this data really needs to live in Salesforce, even though it's recent...