In our last (huge!) post, we did a number of "conditional" row filters and edits (e.g. changing the value of the "email" field if someone's last name began with a certain letter).

In this post, we'll put two CSV files together (by putting two "DataFrames" representing them into a new "DataFrame" with data from both sources). This can replace VLOOKUP(...) or INDEX(MATCH(...)...) operations in Excel.

We'll just do exercises with 2 CSV files at a time, but to combine 3 or more, just combine them "2 at a time" in sequence.

(Combine #1 & #2, then combine the output of that w/ #3, etc.)

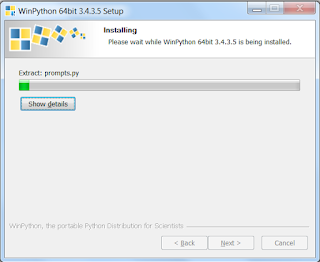

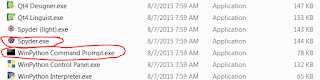

(Prep work: First, make sure you've created a ".CSV" file like the one described in "First Scripts". I called mine "sample1.csv". As in that post, in each example, we'll overwrite the contents of a piece of Python code saved to our hard drive as "script.py" and run the entire script.)

More prep work: create a 2nd CSV

Create another ".CSV" file with the following contents - I am calling mine "sample2.csv" and storing it in the same folder where I stored "sample1.csv":

"PersonId","FirstName","LastName","Em","FavoriteFood"

"983mv","Shirley","Temple","st@example.com","Lollipops"

"9e84f","Andrea","Smith","as@example.com","Kale"

"k28fo","Donald","Duck","dd@example.com","Pancakes"

"x934","Marilyn","Monroe","mm@example.com","Carrots"

"8xi","Albert","Howard","ahotherem@example.com","Potatoes"

"02e","Vandana","Shiva","vs@example.com","Amaranth"

Note the following traits about this 2nd CSV file:

- Some people are in both files, others are in just #1, & others are in just #2

-

None of the column names are spelled exactly the same way between the two CSV files. However, certain columns seem to express the same general idea (name, email)

-

That said, there isn't total consistency - the potato-loving "Albert Howard" in #2 has a different e-mail address from the "Albert Howard" in #1.

- Q: Is this additional contact information about the same real-world person or are we looking at two different real-world "Albert Howard"s?

- A: Tough call. This is for you to decide according to your knowledge of your data.

What's important is to realize that this is a business problem you must solve before telling the computer how to decide that two rows from two different CSV files "belong together."

(But you probably already knew that if you're an Excel CSV-joining whiz.)

- Other columns seem to be unique to one CSV or another in terms of actual meaning ("Company" is just in #1, "FavoriteFood" is just in #2, and "Id"/"PersonId" don't seem to have anything to do with each other with respect to people who are in both CSV files, so they are probably each unique to their respective CSV files as well.

- #1's "Id" & #2's "PersonId" columns don't really seem to have anything to do with each other, with respect to people who are in both CSV files

Merging Two DataFrames: Code Overview

Here's the basic way of writing a command for merging two DataFrames (the output from this command is yet another DataFrame):

ReferenceToDataFrameNumber1.merge(ReferenceToDataFrameNumber2, how='MergeTypeCode', on=['ColumnNameHere', 'AnotherColumnNameHere'])

First, pick one of our two DataFrames (doesn't matter which) and decide to call it the "left"-side DataFrame (its columns will come out on the left-hand side of the output DataFrame).

It could be the "nickname" where we've stored the contents of a DataFrame (such as "df1"), or it could be a chunk of code whose output is a DataFrame.

After that, we type ".merge()"

Inside the parentheses of the ".merge()" command, the first thing we type is a reference to our other DataFrame (which we will refer to as the "right"-side DataFrame).

Again, it could be the "nickname" where we've stored the contents of a DataFrame (such as "df2"), or it could be a chunk of code whose output is a DataFrame.

Then we type a comma and a space.

After that, still inside the parentheses of the ".merge()" command, we type "how='...'" where the "..." is one of the following 4 lower-cased words: "outer", "inner", "left", or "right".

- "Outer" means, "include every row from both DataFrames in the output, but put matching rows' data together on the same line."

- "Inner" means, "in the output, only include rows where the values from both DataFrames match."

- "Left" means, "include every row from the 'left-side' DataFrame in the output, and put data from the 'right-side' DataFrame on the same line if it has data that just so happens to match."

- "Right" means, "include every row from the 'right-side' DataFrame in the output, and put data from the 'left-side' DataFrame on the same line if it has data that just so happens to match."

Then we type a comma and a space.

Finally, we type "on=...".

- The "..." can be of the format "'JustOneColumnNameHere'", "['JustOneColumnNameHere']", or, to say that values must match across MULTIPLE column names at the same time (e.g. a first AND last name match) "['ColumnNameHere','AnotherColumnNameHere','AndSoOn']".

- However, the colum names specified in "on" must be spelled the same - all the way down to capitalization - between the two DataFrames.

So if the spelling between "equivalent" columns is different, as in our data, we're going to have to rename columns from one DataFrame or the other before passing it to the ".merge()" operation. We'll go over the code for that in an example later.

Important Pandas Note: Unlike with Excel VLOOKUP() or INDEX(MATCH()), in any type of Pandas ".merge()" operation, if multiple lines from the DataFrames being merged match each other, you'll get multiple lines of output.

It's actually more accurate than the way Excel handles things, but it takes some getting used to.

So an "inner" merge on DataFrames from these two CSV files using "on='Id'":

Input #1:

"Id","PastEmployer"

"1","RCA"

"2","United States Congress"

"2","Fox"

Input #2:

"Id","BelovedFood"

"1","Snow Peas"

"2","Carrots"

"2","Beets"

"2","Cookies"

Would produce output that looks like this. Note the 6 lines for ID #2 - that's 2 multiplied by 3 (2 lines from input #1, matching 3 lines from input #2):

"Id","PastEmployer","BelovedFood"

"1","RCA","Snow Peas"

"2","United States Congress","Carrots"

"2","United States Congress","Beets"

"2","United States Congress","Cookies"

"2","Fox","Carrots"

"2","Fox","Beets"

"2","Fox","Cookies"

Example With "Outer"

Code:

import pandas

df1 = pandas.read_csv('C:\\tempexamples\\sample1.csv', dtype=object)

df2 = pandas.read_csv('C:\\tempexamples\\sample2.csv', dtype=object)

print('---Contents of DataFrame "df1":---')

print(df1)

print('---Contents of DataFrame "df2":---')

print(df2)

newdf = df1.merge(df2.rename(columns = {'LastName':'Last', 'FirstName':'First', 'Em':'Email'}), how='outer', on=['Last', 'First'])

print('---Contents of DataFrame "newdf":---')

print(newdf)

Output text:

---Contents of DataFrame "df1":---

Id First Last Email Company

0 5829 Jimmy Buffet jb@example.com RCA

1 2894 Shirley Chisholm sc@example.com United States Congress

2 294 Marilyn Monroe mm@example.com Fox

3 30829 Cesar Chavez cc@example.com United Farm Workers

4 827 Vandana Shiva vs@example.com Navdanya

5 9284 Andrea Smith as@example.com University of California

6 724 Albert Howard ah@example.com Imperial College of Science

---Contents of DataFrame "df2":---

PersonId FirstName LastName Em FavoriteFood

0 983mv Shirley Temple st@example.com Lollipops

1 9e84f Andrea Smith as@example.com Kale

2 k28fo Donald Duck dd@example.com Pancakes

3 x934 Marilyn Monroe mm@example.com Carrots

4 8xi Albert Howard ahotherem@example.com Potatoes

5 02e Vandana Shiva vs@example.com Amaranth

---Contents of DataFrame "newdf":---

Id First Last Email_x Company PersonId Email_y FavoriteFood

0 5829 Jimmy Buffet jb@example.com RCA NaN NaN NaN

1 2894 Shirley Chisholm sc@example.com United States Congress NaN NaN NaN

2 294 Marilyn Monroe mm@example.com Fox x934 mm@example.com Carrots

3 30829 Cesar Chavez cc@example.com United Farm Workers NaN NaN NaN

4 827 Vandana Shiva vs@example.com Navdanya 02e vs@example.com Amaranth

5 9284 Andrea Smith as@example.com University of California 9e84f as@example.com Kale

6 724 Albert Howard ah@example.com Imperial College of Science 8xi ahotherem@example.com Potatoes

7 NaN Shirley Temple NaN NaN 983mv st@example.com Lollipops

8 NaN Donald Duck NaN NaN k28fo dd@example.com Pancakes

"sample1.csv" has 7 rows and "sample2.csv" has 6 rows. In this output, there are 9 rows because 4 rows from each CSV "match" a row from the other CSV exactly once (same first+last name) and can share a line in the output data, but 5 rows are unique to one CSV or the other, for a total of 9 output rows.

I printed the contents of DataFrames I nicknamed "df1" & "df2" in the output, but the area to focus on is the output of the DataFrame we saved by the nickname "newdf" and the code on line 8 that produced it.

There's a lot to go over here, so let's dive in.

First - remember that note from above saying that the column names needed to match? Instead of just passing in the nickname "df2" as a reference to a "right-side" DataFrame in the beginning of the ".merge()" parentheses, I passed in a code that generates a new DataFrame.

"df2.rename(columns = {'LastName':'Last', 'FirstName':'First', 'Em':'Email'})" produces a dataframe almost exactly like the one stored under the nickname "df2," only its "LastName," "FirstName," & "Em" columns have been renamed to match the naming of columns from the DataFrame stored under the nickname "df1."

That's the main tricky thing I did!

There's a lot of code to read on line 8, but if you think of that entire chunk before the first comma (",") as one big reference to a DataFrame to merge with "df1," you'll see that it still follows the normal pattern that the ".merge()" command requires us to follow.

I set the "how" parameter in line 8 to "'outer'", so our output file includes data from both spreadsheets, with blanks ("NaN") filling in the gaps where we don't know data about someone (because they weren't in the spreadsheet containing that data).

Finally, although I renamed 3 columns as if I were going to merge on them all, in the end, I decided that I just wanted to "match" on First Name & Last Name. I decided I was okay with the e-mail addresses being different.

Note that the "on=" columns got combined (the output only has 1 "First" column and 1 "Last" column), but the other columns that coincidentally had the same name in both inputs (that is, "Email") did not. To help you tell them apart, Pandas renamed them "Email_x" (from the left side) and "Email_y" (from the right side) in the output.

Examples With "Inner", "Left," & "Right"

Let's change just one thing in our code (besides not printing out df1 & df2): let's change "outer" to "inner."

Code:

import pandas

df1 = pandas.read_csv('C:\\tempexamples\\sample1.csv', dtype=object)

df2 = pandas.read_csv('C:\\tempexamples\\sample2.csv', dtype=object)

newdf = df1.merge(df2.rename(columns = {'LastName':'Last', 'FirstName':'First', 'Em':'Email'}), how='inner', on=['Last', 'First'])

print('---Contents of DataFrame "newdf":---')

print(newdf)

Output text:

---Contents of DataFrame "newdf":---

Id First Last Email_x Company PersonId Email_y FavoriteFood

0 294 Marilyn Monroe mm@example.com Fox x934 mm@example.com Carrots

1 827 Vandana Shiva vs@example.com Navdanya 02e vs@example.com Amaranth

2 9284 Andrea Smith as@example.com University of California 9e84f as@example.com Kale

3 724 Albert Howard ah@example.com Imperial College of Science 8xi ahotherem@example.com Potatoes

Of our 7 rows from "sample1.csv" and our 6 rows from "sample2.csv," only 4 rows from each share both a first and last name with a row in the other CSV.

Since this is an "inner" merge, we've said we only want the output to contain rows where the data has something in common.

---

Now let's change "inner" to "left."

Code:

import pandas

df1 = pandas.read_csv('C:\\tempexamples\\sample1.csv', dtype=object)

df2 = pandas.read_csv('C:\\tempexamples\\sample2.csv', dtype=object)

newdf = df1.merge(df2.rename(columns = {'LastName':'Last', 'FirstName':'First', 'Em':'Email'}), how='left', on=['Last', 'First'])

print('---Contents of DataFrame "newdf":---')

print(newdf)

Output text:

---Contents of DataFrame "newdf":---

Id First Last Email_x Company PersonId Email_y FavoriteFood

0 5829 Jimmy Buffet jb@example.com RCA NaN NaN NaN

1 2894 Shirley Chisholm sc@example.com United States Congress NaN NaN NaN

2 294 Marilyn Monroe mm@example.com Fox x934 mm@example.com Carrots

3 30829 Cesar Chavez cc@example.com United Farm Workers NaN NaN NaN

4 827 Vandana Shiva vs@example.com Navdanya 02e vs@example.com Amaranth

5 9284 Andrea Smith as@example.com University of California 9e84f as@example.com Kale

6 724 Albert Howard ah@example.com Imperial College of Science 8xi ahotherem@example.com Potatoes

There are 7 rows because that's how many rows "sample1.csv" (which became DataFrame "df1") had. 4 of those rows have "extra" data at right from "sample2.csv".

---

Now let's change "left" to "right."

Code:

import pandas

df1 = pandas.read_csv('C:\\tempexamples\\sample1.csv', dtype=object)

df2 = pandas.read_csv('C:\\tempexamples\\sample2.csv', dtype=object)

newdf = df1.merge(df2.rename(columns = {'LastName':'Last', 'FirstName':'First', 'Em':'Email'}), how='right', on=['Last', 'First'])

print('---Contents of DataFrame "newdf":---')

print(newdf)

Output text:

---Contents of DataFrame "newdf":---

Id First Last Email_x Company PersonId Email_y FavoriteFood

0 294 Marilyn Monroe mm@example.com Fox x934 mm@example.com Carrots

1 827 Vandana Shiva vs@example.com Navdanya 02e vs@example.com Amaranth

2 9284 Andrea Smith as@example.com University of California 9e84f as@example.com Kale

3 724 Albert Howard ah@example.com Imperial College of Science 8xi ahotherem@example.com Potatoes

4 NaN Shirley Temple NaN NaN 983mv st@example.com Lollipops

5 NaN Donald Duck NaN NaN k28fo dd@example.com Pancakes

There are 6 rows because that's how many rows "sample2.csv" (which became DataFrame "df2" and then its "renamed" version) had. 4 of those rows have "extra" data at left from "sample1.csv".

Example: Merging On Different Columns Than Before

Let's change just one thing in our code - we're going to say that a first-last name match isn't good enough.

We're going to tell our code to only merge people if first name, last name, AND e-mail match.

(In other words, treat the "Albert Howard" records as two different people since their e-mail addresses don't match.)

This code will go through all 4 examples in one piece of code, so to save typing...

- I gave that awful piece of code based on "df2" a nickname of "df2renamed"

- I gave my list of column-names to "match" on a nickname of "joincols".

(You can give code like "'zzz'" or "['yyy','zzz']" nicknames, too!)

Code:

import pandas

df1 = pandas.read_csv('C:\\tempexamples\\sample1.csv', dtype=object)

df2 = pandas.read_csv('C:\\tempexamples\\sample2.csv', dtype=object)

df2renamed = df2.rename(columns = {'LastName':'Last', 'FirstName':'First', 'Em':'Email'})

joincols = ['Last', 'First', 'Email']

newdfouter = df1.merge(df2renamed, how='outer', on=joincols)

newdfinner = df1.merge(df2renamed, how='inner', on=joincols)

newdfleft = df1.merge(df2renamed, how='left', on=joincols)

newdfright = df1.merge(df2renamed, how='right', on=joincols)

print('---Contents of DataFrame "newdfouter":---')

print(newdfouter)

print('---Contents of DataFrame "newdfinner":---')

print(newdfinner)

print('---Contents of DataFrame "newdfleft":---')

print(newdfleft)

print('---Contents of DataFrame "newdfright":---')

print(newdfright)

Output text:

---Contents of DataFrame "newdfouter":---

Id First Last Email Company PersonId FavoriteFood

0 5829 Jimmy Buffet jb@example.com RCA NaN NaN

1 2894 Shirley Chisholm sc@example.com United States Congress NaN NaN

2 294 Marilyn Monroe mm@example.com Fox x934 Carrots

3 30829 Cesar Chavez cc@example.com United Farm Workers NaN NaN

4 827 Vandana Shiva vs@example.com Navdanya 02e Amaranth

5 9284 Andrea Smith as@example.com University of California 9e84f Kale

6 724 Albert Howard ah@example.com Imperial College of Science NaN NaN

7 NaN Shirley Temple st@example.com NaN 983mv Lollipops

8 NaN Donald Duck dd@example.com NaN k28fo Pancakes

9 NaN Albert Howard ahotherem@example.com NaN 8xi Potatoes

---Contents of DataFrame "newdfinner":---

Id First Last Email Company PersonId FavoriteFood

0 294 Marilyn Monroe mm@example.com Fox x934 Carrots

1 827 Vandana Shiva vs@example.com Navdanya 02e Amaranth

2 9284 Andrea Smith as@example.com University of California 9e84f Kale

---Contents of DataFrame "newdfleft":---

Id First Last Email Company PersonId FavoriteFood

0 5829 Jimmy Buffet jb@example.com RCA NaN NaN

1 2894 Shirley Chisholm sc@example.com United States Congress NaN NaN

2 294 Marilyn Monroe mm@example.com Fox x934 Carrots

3 30829 Cesar Chavez cc@example.com United Farm Workers NaN NaN

4 827 Vandana Shiva vs@example.com Navdanya 02e Amaranth

5 9284 Andrea Smith as@example.com University of California 9e84f Kale

6 724 Albert Howard ah@example.com Imperial College of Science NaN NaN

---Contents of DataFrame "newdfright":---

Id First Last Email Company PersonId FavoriteFood

0 294 Marilyn Monroe mm@example.com Fox x934 Carrots

1 827 Vandana Shiva vs@example.com Navdanya 02e Amaranth

2 9284 Andrea Smith as@example.com University of California 9e84f Kale

3 NaN Shirley Temple st@example.com NaN 983mv Lollipops

4 NaN Donald Duck dd@example.com NaN k28fo Pancakes

5 NaN Albert Howard ahotherem@example.com NaN 8xi Potatoes

Note that there are now only 3 rows, not 4, that are considered to "match," because the two Albert Howard records have different e-mail addresses.

That means that there are now:

- 10 rows in "outer"

(3 "matches" and 7 "unique-to-one-or-the-other" rows, instead of 4 & 5)

- 3 rows in "inner"

(3 "matches")

- The same number of rows as before in "left" and "right," but no data filled in from "the other file" on Albert Howard's row in either

(because it wasn't considered "matching")

Style Notes

- I always like to save the results of ".merge()" commands to a new nickname. I tend to call it something like "joindf." So, "joindf = ..."

I've found that the ".merge()" code takes up so much space on the screen, I really don't want to combine too much more code with it. I prefer to make a clean break at this point, which is where nicknaming comes in handy.

- You may have noticed all my ".read_csv()" commands added ", dtype=object" inside the parentheses after the filename. I was getting errors when I merged my DataFrames - I think Pandas was trying a little too hard to decide whether values it was importing from CSV were numbers, plain text, etc - so this little piece of code said, "Don't worry about it. Just import what you see as plain text."

- Q: How did I guess my errors had to do with Pandas trying to figure out numbers vs. plain-text?

- A: Because the errors seemed to have something to do with Pandas trying to do "absolute value" operations against my data.

Whenever you have some data in your file that kind of looks numeric / date-like / etc, if you don't actually want to do math against it, a good way to fix errors it to just force your data to import as plain-text.

- I lied to you a little bit about having to rename columns before you merge two DataFrames. There is another way, and you might run into it online. But I don't like it. Nevertheless, I'll explain it.

Instead of "on=...", you have a "left_on=..." and "right_on=...", where each "..." is a single column-name or a list of column names (of the same size, in the "same order").

However, it produces ugly output, in my opinion. Your data from those columns ends up split across two different output columns, instead of being neatly under one same-named column.

Here's an example - I'll throw in some code we haven't covered in lines 5, 6, & 8 to re-order the columns and expose why I consider it "ugly." Note that in line 4, we just pass the ".merge()" operation a reference to "df2" itself (because in this case, we're not renaming its columns).

Take a look at how Jimmy Buffet's last name is in "Last," but Donald Duck's's last name is in "LastName":

Code:

import pandas

df1 = pandas.read_csv('C:\\tempexamples\\sample1.csv', dtype=object)

df2 = pandas.read_csv('C:\\tempexamples\\sample2.csv', dtype=object)

newdfugly = df1.merge(df2, how='outer', left_on=['Last','First'], right_on=['LastName','FirstName'])

def put_cols_at_left(referenceToDataFrame, listOfColumnNames):

return referenceToDataFrame[listOfColumnNames+[c for c in referenceToDataFrame.columns if c not in listOfColumnNames]]

print('---Contents of DataFrame "newdfugly" (with "last" & "first" columns moved to the left):---')

print(put_cols_at_left(newdfugly, ['Last','LastName','First','FirstName']))

Output text:

---Contents of DataFrame "newdfugly" (with "last" & "first" columns moved to the left):---

Last LastName First FirstName Id Email Company PersonId Em FavoriteFood

0 Buffet NaN Jimmy NaN 5829 jb@example.com RCA NaN NaN NaN

1 Chisholm NaN Shirley NaN 2894 sc@example.com United States Congress NaN NaN NaN

2 Monroe Monroe Marilyn Marilyn 294 mm@example.com Fox x934 mm@example.com Carrots

3 Chavez NaN Cesar NaN 30829 cc@example.com United Farm Workers NaN NaN NaN

4 Shiva Shiva Vandana Vandana 827 vs@example.com Navdanya 02e vs@example.com Amaranth

5 Smith Smith Andrea Andrea 9284 as@example.com University of California 9e84f as@example.com Kale

6 Howard Howard Albert Albert 724 ah@example.com Imperial College of Science 8xi ahotherem@example.com Potatoes

7 NaN Temple NaN Shirley NaN NaN NaN 983mv st@example.com Lollipops

8 NaN Duck NaN Donald NaN NaN NaN k28fo dd@example.com Pancakes

It's up to you, though. I sometimes use the "left_on" & "right_on" style when I'm merely experimenting with joining data and don't need any "pretty" output yet because it's just a little less text to type.

That's a good pausing point!

Please comment: what would you like to learn next?

Table of Contents